How Much a Memory Cost: An Insight Into the Value of Your Memories Through Apple's New "Journal" Feature

In today's technology-driven world, your intimate thoughts are worth their weight in gold.

collage I created to convey the concept of “how much a memory cost”, a title that’s a play on kendrick lamar’s ‘how much a dollar cost’. all images acquired from pinterest

I’ve had a journal since I was about twelve years old. Then, I called it a diary where I penned down thoughts on the book I was reading at the time, what I wanted for Christmas, and Taylor Swift song lyrics that I loved. As I transitioned into adolescence, life grew in complexity as did my thoughts, experiences, and feelings. At eighteen, my diary transformed into a journal that held all the intimate details of my first heartbreak, my wishes for college, and Warsan Shire quotes. Now, my journal holds within it the life force that brings me back to myself. My journal is the closest anyone can get to me without me ever having to utter a word. I jot down ideas, memories, dilemmas, and streams of consciousness, finding answers even when I’m not in active reach for them. My journaled self is my archival self.

Apple’s New Journal Feature

Apple recently introduced a new journal feature for iPhone users. The application is automatically installed onto your phone once you upgrade to iOS 17.2. From a user experience (UX) perspective, the application is aesthetically pleasing and navigable. It offers distinct journaling methods that I’d classify into these three categories:

Photo or activity-based prompt journaling:

Here, Apple suggests journaling prompts for you based on either photographs in your gallery depicting recent experiences or memories, or location data showcasing a place you visited recently.

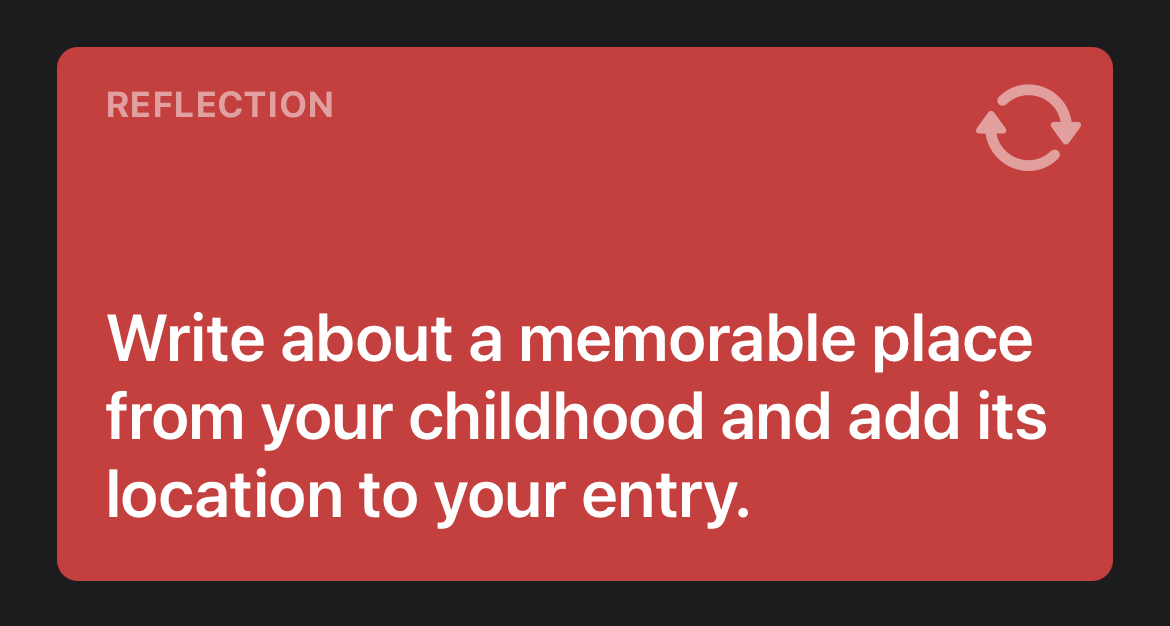

Sentence-based prompt journaling:

Here, Apple suggests sentence-based journal prompts such as, “What is your favorite thing about yourself?”Individual entry, non-prompt-based journaling

Here, there are no specified prompts given by Apple but rather the user is free to journal whatever they desire and add additional media.

To these entries, a user can add photos, audio, and locations allowing for an elevated, comprehensive journaling experience.

Intimate Data

As seen above, the Journal app provides elaborate journaling prompts - allowing a user to delve into the intricate details of their day that they may otherwise have forgotten. While in traditional pen-and-paper journaling, a user may choose to focus on a singular, stand-alone aspect of their day, the journal app does the work of “remembering” for the user. What may have been a mundane task, likely to be forgotten by the user is brought up by the Journal app, allowing them to reminisce on, gather, and record their thoughts and feelings about it.

On the surface, the Journal feature is an excellent gateway into journaling for those new to the practice and an enriching one for seasoned journalers. However, my brief interactions with it have raised critical questions on data privacy and data protection. The nature of data being collected by Apple through the journal app can best be classified as intimate data. Intimate data refers to personal and highly sensitive information about an individual’s private life, relationships, health, or personal preferences. Unlike the data gathered by tracking cookies on websites which only really gather insights on your search history, shopping preferences, and geographical location, intimate data opens up a whole new world of access to the individual. The Journal app keeps track of my phone calls, the places I frequent, my physical fitness activity, and even the podcasts I listen to.

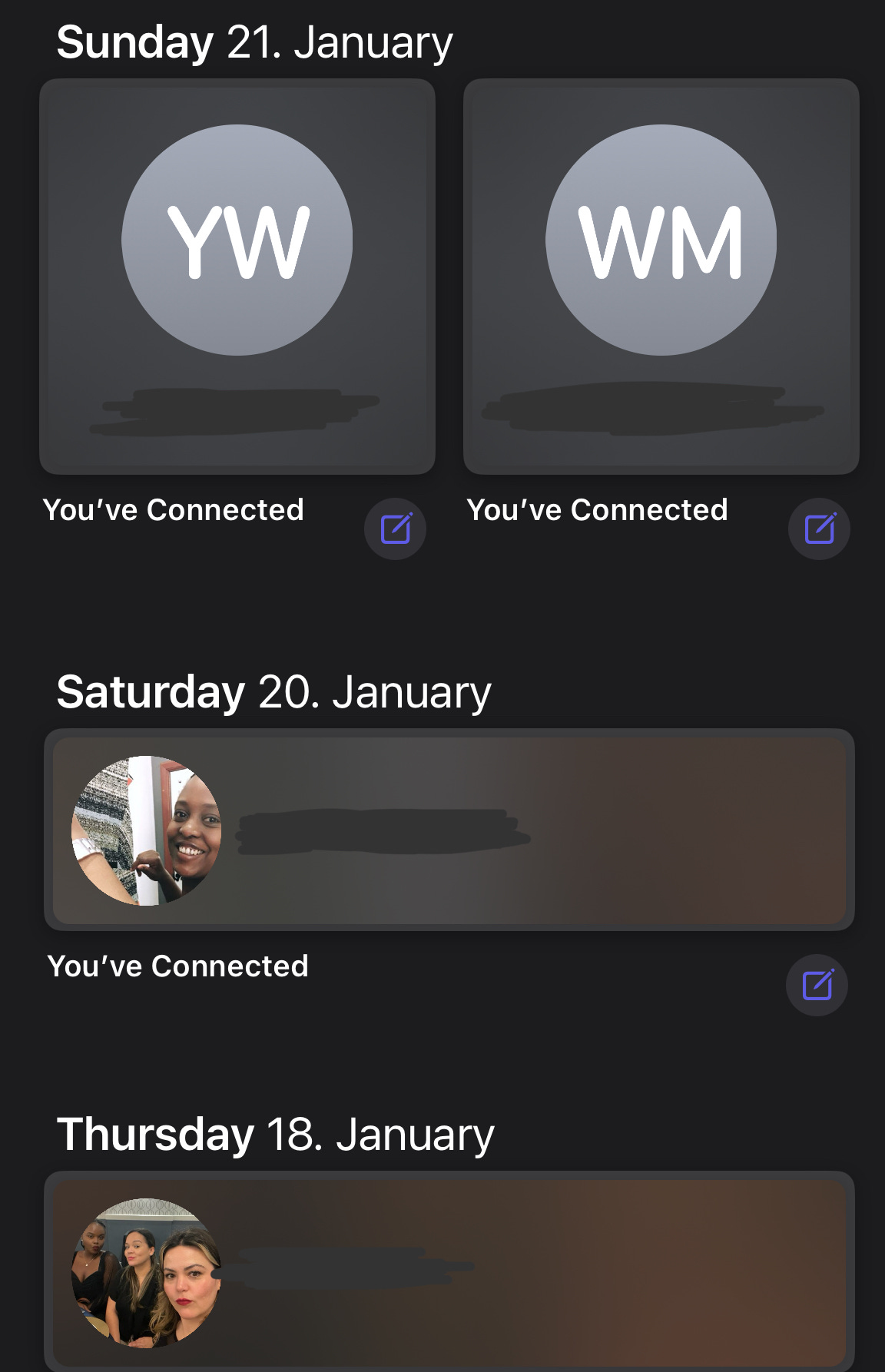

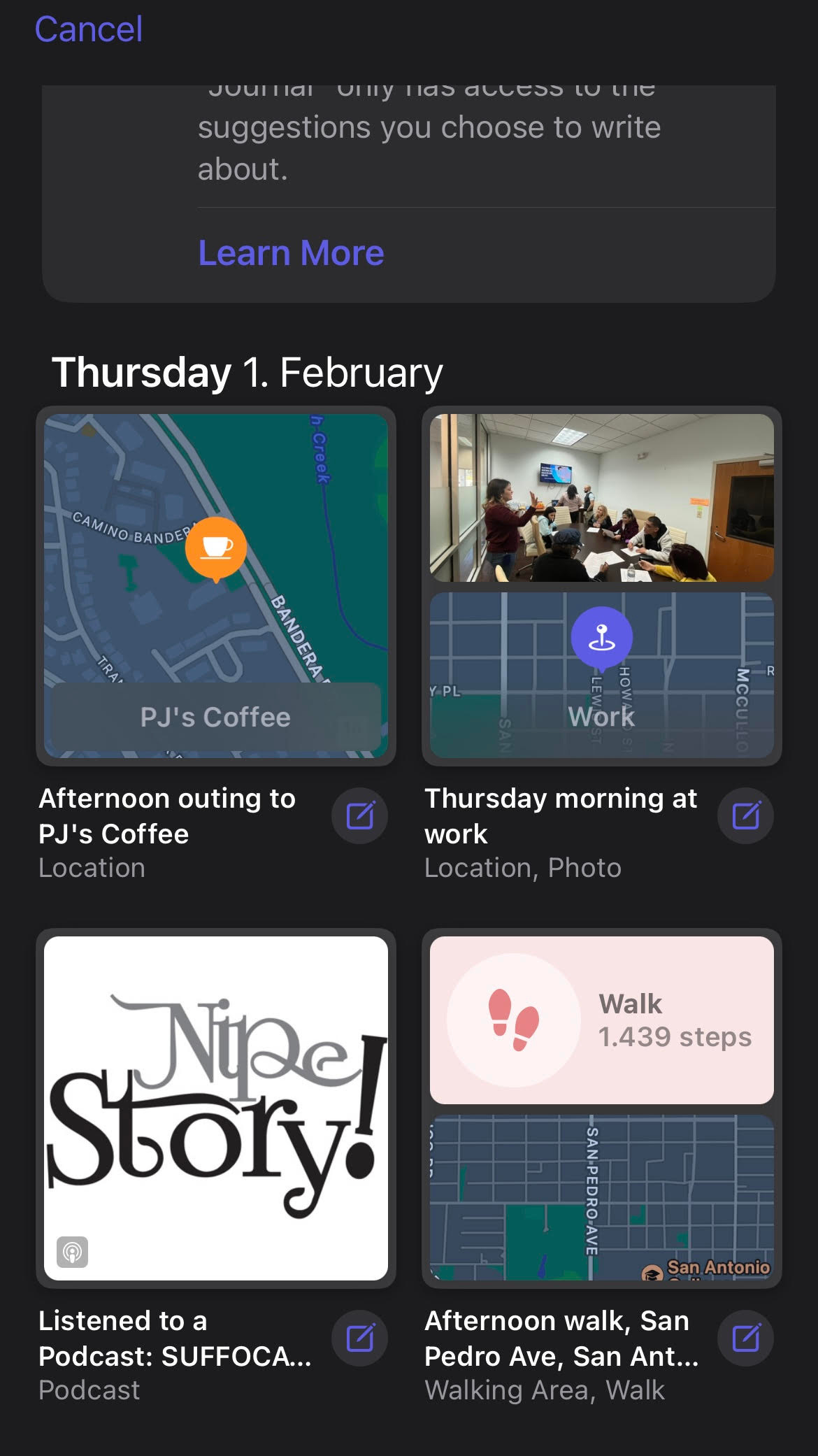

a screenshot of my “recent” tab in the Journal app showcasing the phone calls I had that week

a screenshot of the “recent” tab in my Journal app showcasing the day’s activities

An apt example to illustrate the difference between the data gathered from tracking cookies vs intimate data is this: Tracking cookies on a consumer website could track my location as Texas, my number of clicks on the website as 10 per minute, and my shopping preference as discounted items. Despite this being a large amount of information, it has little to nothing to do with who I am as a person. This type of data, behavioral data, is largely impersonal and easily interchangeable between users. On the other hand, intimate data gives an in-depth insight into the individual. Moreover, it provides the “why” behind the behavior.

While behavioral data merely indicates that user x engages in behavior y, intimate data reveals that user x's behavior y is influenced by factor z. The x —> y relationship prompts data analysts to seek correlations to understand user behavior. However, the x —> y (due to z) relationship not only facilitates the identification of correlated behaviors but also unveils causal relationships, enabling a deeper understanding of user behavior.

To demonstrate, let's explore a scenario where a user's behavioral data indicates a regular purchase pattern of two teddy bears annually, typically around the same dates each year. Delving into intimate data reveals a deeper narrative. It could showcase that the reason the user purchases those teddy bears around those specific times is that they serve as memorabilia for a loved one who passed away. They could also reveal in their journal that the deceased loved one is a parent and that the specific dates are their birthday and death anniversary. They then further state that they donate the teddy bears to a charitable foundation that their deceased parent used to donate to. Where only three data points existed then (user, purchase item, and purchase date), five exist now (user, purchase item, purchase date, purchase reason, and purchase final destination).

image showcasing the x —> y vs x—> y (because z) relationship

Now with a clear grasp of the value of intimate data, we can move to the intentionality of the journal app. The user interface (UI) places high emphasis on using Apple’s pre-designed photo-based and sentence-based prompts through the colors, images, and locations displayed. By intentionally nudging a user towards their prompts, Apple gets you to not only jot down your intimate thoughts but also steers you towards writing only specific thoughts and reflections. X (Twitter) user Kristen Ruby put it best when she posed the question:

“what if you want to write a journal entry about your new Dog - but AI prompts you to reflect on your voting choices? Do you see why this experiment could go horribly wrong?”

Now as you reflect on a certain prompt and jot down your thoughts on the app, millions of other users are probably doing the same. This mass participation enables Apple to amass a vast repository of intimate data on shared topics, essentially constructing an unimaginably extensive database that encapsulates the intimate reflections of countless individuals.

Apple and Data Privacy

It is no secret that big tech corporations track and sell user data with giants like Google facing legal action for selling privacy data obtained from users browsing in incognito mode. Apple has carefully crafted its reputation around prioritizing user data privacy in a bid to set itself apart from its fellow tech behemoths. The organization offers a security feature called Advanced Data Protection, which employs end-to-end encryption for messages, photos, and documents stored on Apple's cloud platform, iCloud. This encryption protocol extends to the Journal application, reassuring users that all data remains private and inaccessible even to Apple.

History, however, dictates a discrepancy with Apple having faced up to four class action lawsuits for alleged data privacy violations despite users clicking the ‘do not share my information’ button. Reading between the lines shows that Apple’s protections pertain to sharing data with third-party applications but not first-party applications, meaning that they do keep and can use your data for their first-party applications e.g. the App Store, Apple Music, Apple TV, Stocks, and Books. The legitimacy of this is, however, up for debate.

Machine Learning and Intimate Data

As mentioned severally in this article, intimate data represents a paradigm shift with its uses potentially extending far beyond those of behavioral data. While behavioral data is primarily used for business analytics and subsequent targeted advertising, intimate data could be used both for the latter and machine learning (ML). ML is the subset of artificial intelligence (AI) that allows machines to imitate human behavior.

To understand how machine learning models are trained, we need to understand the machine learning lifecycle. Broadly speaking, there are 5 distinct stages of building an ML model before its deployment:

Data Collection and Assembly: Gathering relevant datasets for analysis.

Data Pre-Processing: Cleaning, transforming, and preparing raw data for analysis.

Data Exploration and Visualization: Examining and understanding data patterns and characteristics through visualizations.

Model Building: Creating and training the machine learning model.

Model Evaluation: Assessing the performance and effectiveness of the trained model against predefined metrics.

an image showcasing the stages of building an ML model pre-deployment, highlighting the pre-processing stage. image from v7 labs.

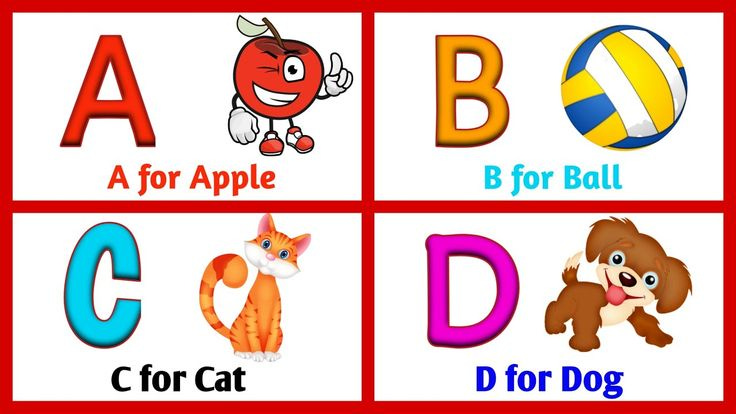

For this case, I would like to zero into the data pre-processing stage. During pre-processing, data is cleaned by removing null and redundant values, and inconsistent data points. Data labeling, a crucial part of the process, also occurs here. Data labeling is described as annotating data to indicate a target that you want a model to predict. The process closely mimics how children are taught to read and make sense of the world. As a child, you were presented with a colorful chart with different letters of the alphabet and corresponding items. Here you learned to make the association between the letter “a”, the word “apple”, and an image of an apple. You did this iteratively until you understood it such that when someone pointed at the picture of an apple on the learning chart you identified the letter in question as “a”. In ML, meticulous attention to the pre-processing stage is paramount. This step lays the foundation for effective model training, much like how a child's understanding of the world is largely shaped by their foundational knowledge of letters and words.

a children’s learning chart showcasing the abc’s. image from pinterest.

So Why Should You Care?

Data annotation is an onerous process that requires hundreds of hours of human labor to accurately identify and classify media. These hours of laborious work make it time-consuming and expensive for a company to build an ML model. According to my analysis, here is where the Journal app steps in. After selecting your journaling prompt, typically accompanied by images, you proceed to craft a detailed journal entry. This involves delving into not just the visual elements depicted but also your emotions and reflections associated with the prompt. Journaling, in this way, becomes a “cheat code” for data labeling.

While data annotators would still have to pre-process your data, the labeling time is significantly reduced as there already exists a user-generated, written description of the media. In addition to describing what was happening in the images, the user incorporates the aspect of intimate thought and opinion; creating a gold mine for training data. The subjective nature of data labeling begins to decrease because rather than a stranger trying to explain what is happening in an image, the person who captured the image, who was at the scene of action, describes it; leaving little to no room for misinterpretation.

If one journal user could produce tens of data points per journal entry, imagine how much new data is being generated en masse; and how valuable it is for training new and existing ML algorithms.

A mass increase in available data marked with a corresponding decrease in data-labeling times simply allows for the accelerated development of Frontier AI models. As is, these powerful models pose a regulatory challenge. However, empowering them further with intimate data propels them even closer to human-like operation. This, as you can imagine, seeks to exacerbate already existing challenges in the AI world such as ethical challenges, biased systems, misinformation, and the AI arms race. As corporations rush to profit from the AI boom, their concerns become less about ethics and more about amassing large amounts of data to build models regardless of the privacy and ethical concerns involved. If we, as a collective, consistently express concern around the rapid proliferation of AI, then what good does it do us to voluntarily offer up our most intimate data to these companies? Rather than having the corporations scrap and mine our data to be able to use it, we are voluntarily giving it up under the guise of “wellness”. There is a poignant Swahili saying that explains this predicament, “mbuzi kajileta kichinjio” which translates to “the goat has brought itself to the slaughterhouse”. In many ways, we unknowingly aid in our detriment.

Policy Interventions

Since the Journal app is still in its infancy, much of the speculation surrounding it may be just that - speculation. As it stands, the app's existence and subsequent data gathering have yet to exhibit any instances of data privacy violations simply because users opted in to its use. Should Apple be found selling the data to third parties, then it will be imperative to make a case for stringent regulation because of the nature of the data. However, for anyone interested in what global data privacy laws exist to safeguard personal data, check out these:

What do you think about the Journal app? Do you use it? Do you like it? I’d love to hear your thoughts in the comments.

Always a great read!

Another question to ask is, should we be proactive in asking Apple about the usage of the data? If Apple is to train models using the data, asking them to be more stringent after the fact won’t change the training weights of the models. The models will already have captured crucial information that is hard to remove even if we remove the data after the training.